I just watched a perfect case study in why we’re drowning in conspiracy theories unfold on Twitter in real time.

A large verified account posted a viral thread claiming “leftists” and “radical Muslims” have formed a secret alliance to Destroy Western Civilization™. Capital D, capital W, capital C. The thread racked up millions of views and tens of thousands of likes before lunch.

My response? A boring, reasonable explanation: maybe it’s just fringe progressives misapplying their “protect minorities from discrimination” principle to people who actually deserve criticism and legal consequences. No secret cabal required. Just well-intentioned people making predictable mistakes.

The result? Crickets. A handful of views. Zero engagement.

And that tells you everything you need to know about why reasonable explanations die while conspiracy theories thrive.

The Engagement Trap

Here’s what actually happened, and it’s playing out thousands of times daily across every social platform.

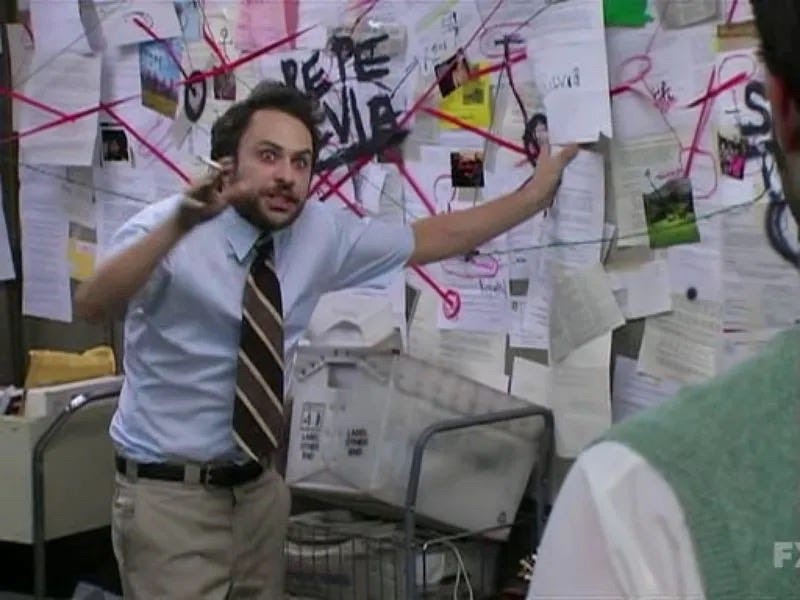

The conspiracy theory had all the elements social media algorithms crave: apocalyptic language (“destroy everything,” “it’s now or never”), simple good-versus-evil framing, and that delicious feeling of being part of an important struggle against dark forces. It made the moment feel urgent and made readers feel important and tribal. Share this now or civilization dies.

My response had none of that. It acknowledged complexity. It offered a simpler explanation that didn’t require secret coordination between groups that actually hate each other. It suggested that maybe, just maybe, the world is messier and more boring than the conspiracy suggests.

The algorithm looked at my post, saw nobody was getting angry enough to engage, and buried it.

This is how truth dies. Not dramatically, but quietly, algorithmically, in the noise.

The Same Pattern, Different Victim: Dundee’s “Braveheart”

Last week, the exact same dynamic played out with a knife incident in Dundee, Scotland. A twelve-year-old girl was charged with possessing weapons, and within hours the story exploded across social media with a very specific narrative.

The viral version cast her as “Sophie Braveheart,” a young Scottish girl defending her little sister from migrants trying to sexually assault them. Right-wing accounts framed it as evidence of inevitable civil war and societal collapse. Elon Musk shared it to his 225 million followers. The engagement was tsunami-like.

The boring reality? Police found “no evidence to substantiate claims being made online the youths were at risk of sexual assault.” Officials specifically warned against “speculation and misinformation surrounding the incident.” And a Bulgarian couple, legal immigrants with an eight-month-old baby, may have been the actual victims of harassment.

But the police statement got buried in local news. The inflammatory version with civilizational stakes got the algorithmic rocket fuel.

Once again: conspiracy travels at the speed of rage. Truth limps along behind, carrying receipts nobody wants to read.

Why Algorithms Love Conspiracy Theories

Social media platforms optimize for one thing: engagement. And conspiracy theories are engagement crack cocaine.

They validate existing fears. They create urgent tribal solidarity. They provide clear, identifiable enemies. They make people feel like they possess special knowledge that the sheep don’t understand. Conspiracy theories press every psychological button that makes humans share content, all at once.

Meanwhile, nuanced explanations make people think “Huh, I guess that makes sense” and scroll on. No shares. No furious comments. No algorithmic rocket fuel.

The platforms literally reward the most inflammatory, divisive content because that’s what keeps people glued to their screens. Truth becomes secondary to emotional impact. And we wonder why we can’t have reasonable conversations anymore.

The Strawman Strategy

The conspiracy thread worked by creating cartoon versions of progressive positions. “Drag queen story hour for kids” became some core leftist priority when it’s a niche cultural program most people don’t have strong opinions about. “Speech codes to protect feelings” reframed concerns about harassment and discrimination as mere emotional fragility.

These caricatures make conspiracy theories much easier to construct. When you’re arguing against strawmen, almost any explanation seems plausible. Why do leftists defend radical Islam despite their contradictory values? Well, they don’t, actually—most progressives distinguish between defending ordinary Muslims from discrimination and defending terrorist organizations. You can simultaneously support Israel’s right to exist, be horrified by Hamas terrorism, feel concern for Palestinian civilians, and oppose policies that harm innocent people.

This isn’t binary. But nuance doesn’t fit the “civilization under attack” narrative that generates clicks.

When Facts Don’t Matter

Here’s the even darker layer: it’s not just that nuanced explanations fail to compete. It’s that a growing segment of the audience actively rejects facts when they contradict The Narrative.

Take the Dundee case. Even after police explicitly stated they had found no evidence to support the sexual assault claims, the blood-and-soil crowd doubled down. They weren’t interested in what actually happened. They wanted the story of innocent Scottish girls being terrorized by migrants because it served their purposes.

This isn’t accidental. There’s a deliberate strategy among certain influencers to manufacture and perpetuate fear—fear of immigrants, fear of demographic change, fear of civilizational collapse. Facts that contradict these narratives aren’t just inconvenient. They’re actively unwelcome.

When your entire political identity depends on civilizational crisis, boring police reports saying “we investigated and found nothing” become the enemy. The fear is the point. The outrage is the product. And social media algorithms are the perfect delivery system.

The Visibility Death Spiral

Here’s the really insidious part: reasonable responses don’t just get ignored—they get actively suppressed.

The algorithm sees low engagement and decides the content isn’t “interesting.” So it shows the post to fewer people, generating even less engagement, triggering even less visibility. Meanwhile, the conspiracy theory keeps getting pushed to more feeds because it’s generating reactions.

The people who might benefit most from seeing measured, thoughtful analysis (those initially drawn to conspiracy thinking but still persuadable) never see the counterarguments, because those counterarguments get filtered out before they ever reach their feeds.

We’ve created information environments where the most extreme voices get the biggest megaphones, reasonable people self-select out of toxic conversations, and corrections spread at a fraction of the speed of the original misinformation. Police statements warning about misinformation get buried while the misinformation itself goes viral.

The Real Alliance

If there’s any alliance worth worrying about, it’s between social media algorithms and extremist thinking. Not because tech companies want extremism—though follow the money and draw your own conclusions—but because they want engagement, and extremism happens to be highly engaging.

The platforms have created information environments perfectly optimized for conspiracy theories and perfectly hostile to truth. Whether it’s secret cabals plotting civilizational destruction or turning a mundane weapons charge into a symbol of The Fall of Western Culture from Invasive Colonizers Antithetical to Civilization, the pattern is identical.

Apocalyptic framing gets rewarded. Nuance gets buried. And we all get dumber and angrier in the process.

What Actually Works (Spoiler: It’s Boring)

The reality is that democratic societies function through constant negotiation between competing values and interests. Most people want safety and prosperity for their families. Social change happens gradually through institutions, not secret alliances plotting civilizational collapse.

But “incremental policy debates within democratic frameworks” doesn’t generate clicks. “Different groups sometimes disagree on priorities” isn’t going viral. “Most problems are complex and require nuanced solutions” won’t trend.

The stuff that actually makes societies work—compromise, good faith debate, acknowledging complexity—is algorithmically invisible.

Breaking the Cycle

So what do we do about this?

Question binary thinking. Recognize that the most engaging content is often the least accurate. Seek out sources that occasionally bore you with nuance. When you see a viral thread claiming secret alliances are plotting to destroy civilization, ask yourself: what’s the simpler explanation?

Usually, it’s much more boring than the conspiracy theory suggests. And boring explanations don’t get algorithmic love.

But maybe that’s precisely why we need more of both—boring explanations and people willing to share them even when they don’t feel urgent, even when they generate no engagement, even when the algorithm buries them.

Because the alternative is information environments that reward conspiracy theories and bury truth. And we’re watching in real time what that does to democratic discourse.

Unfortunately, nuance and accuracy don’t trend on social media. But if we want information environments that support democracy instead of undermining it, we need to flood the zone with truth anyway.

Even when it’s boring. Especially when it’s boring.

The algorithm wants you angry. Don’t give it what it wants.

Discover more from The Annex

