🚨 BREAKING: There may be another explanation for my suppressed reach on X. The plot twist? It doesn’t make this fucked up situation any better. In fact it might just be worse…

On October 18, 2025, I forced 137 bot and suspicious accounts to unfollow my X account. I’d noticed my reach declining over the previous weeks and used a third-party audit tool to identify fake accounts degrading my audience quality. Every social media marketing guide tells you to do this: clean your follower base, remove fake engagement, optimize for authentic interaction. Then my impressions dropped 75% overnight.

And they stayed there.

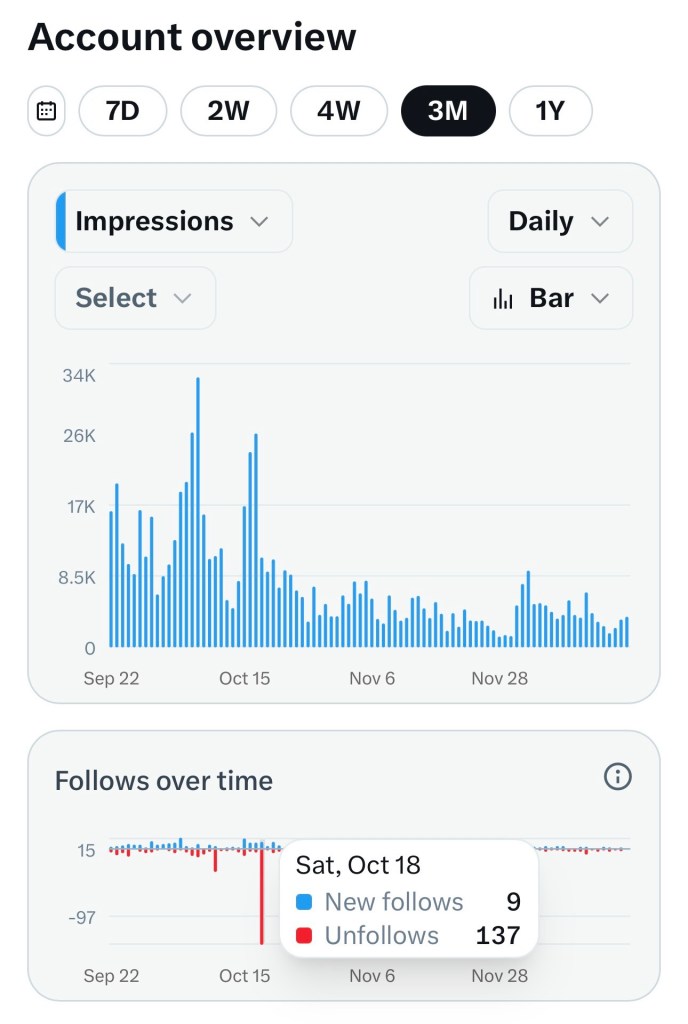

Just look at this:

My impressions, which had been running 15,000-25,000 daily, dropped to under 5,000 and have stayed there ever since.

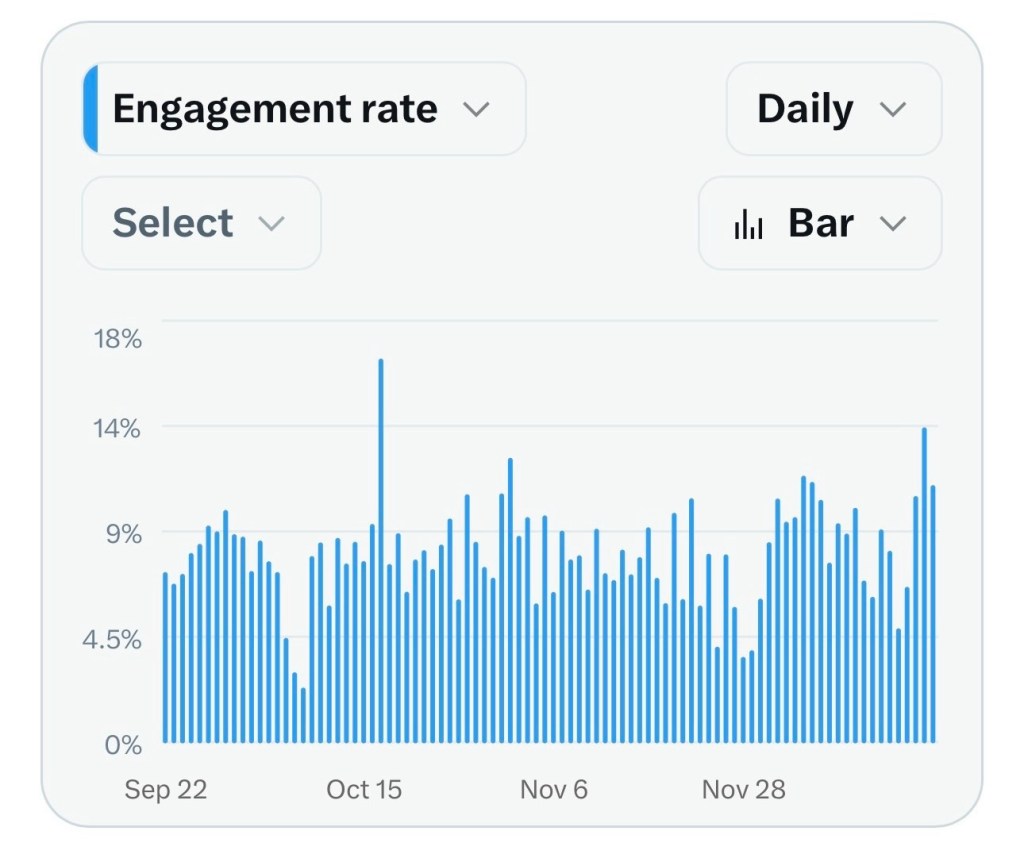

Meanwhile, my engagement rate—the percentage of people who actually interacted with content they saw—has held steady at 7-14% before and after (and that’s pretty damn healthy engagement, not incidentally). The people still seeing my posts are real and engaged and spectacular.

The algorithm just stopped showing my posts to 98% of them.

I don’t know with certainty that the bot purge caused the shadowban. I’ve been the victim of mass-reporting harassment before, after all. And it’s not like X sends notifications explaining why your reach collapsed 75% overnight. But the timing lines up to the day, and the pattern matches what other creators report when bulk follower changes trigger algorithmic flags. It’s the best hypothesis I’ve been able to come up with given the data.

I did everything the platform claims to want, and the algorithm fucked me for it.

By most accounts, X’s algorithm monitors for abnormal follower loss as a signal of potential account health issues: spam behavior, declining content quality, organic user rejection. The system doesn’t appear to distinguish between people leaving because your content sucks and you proactively removing fake accounts to improve audience quality. From the algorithm’s perspective, 137 unfollows in a single day look like a red flag regardless of which side initiated them, and according to multiple creator reports and analyses of platform behavior, bulk unfollow events get treated as bot-like behavior, potentially resulting in shadowbans lasting weeks or months. So when you remove bot accounts in bulk, even ones that were already degrading your metrics by inflating your follower count without real engagement, the algorithm seems to read the sudden drop as evidence of account problems. You get the same penalty as someone whose audience is genuinely abandoning them, because the platform can’t tell the difference between audience rejection and audience curation. Or won’t. Building intent detection into the algorithm is technically possible; distinguishing between 137 accounts unfollowing you and you soft-blocking 137 accounts is a solvable problem. Trivial, even. But I guess solving it doesn’t serve the engagement-optimization imperative.

Either way, you eat the penalty.
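To make the “trivial” claim concrete, here’s a minimal Python sketch of an intent-aware follower-loss check. Everything in it is hypothetical: X’s real ranking signals, event data, and thresholds aren’t public, so `FollowerLoss`, `initiated_by_owner`, the per-day baseline, and the 5× multiplier are illustrative assumptions, not a description of how X actually works. The point is only that once each lost follower is tagged with who ended the relationship, a single `if` separates curation from rejection.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FollowerLoss:
    """One lost follower. Hypothetical schema: X's internal events aren't public."""
    day: date
    follower_id: str
    initiated_by_owner: bool  # True if the account owner removed/soft-blocked them


def loss_by_day(events, ignore_owner_removals):
    """Count lost followers per day, optionally skipping owner-initiated removals."""
    counts = Counter()
    for event in events:
        if ignore_owner_removals and event.initiated_by_owner:
            continue  # curation by the owner, not audience rejection
        counts[event.day] += 1
    return counts


def flagged_days(events, baseline_per_day, ignore_owner_removals, multiplier=5):
    """Days whose loss count exceeds multiplier x the account's usual daily loss.

    The 5x default is an arbitrary, illustrative threshold.
    """
    counts = loss_by_day(events, ignore_owner_removals)
    return sorted(d for d, n in counts.items() if n > baseline_per_day * multiplier)


purge_day = date(2025, 10, 18)
# 137 bots removed by the owner, plus 3 people who unfollowed on their own
events = [FollowerLoss(purge_day, f"bot{i}", True) for i in range(137)]
events += [FollowerLoss(purge_day, f"user{i}", False) for i in range(3)]

# Naive check: 140 losses vs. a threshold of 2 * 5 = 10, so the day is flagged
print(flagged_days(events, baseline_per_day=2, ignore_owner_removals=False))
# prints [datetime.date(2025, 10, 18)]

# Intent-aware check: only 3 organic losses, so nothing is flagged
print(flagged_days(events, baseline_per_day=2, ignore_owner_removals=True))
# prints []
```

The only input this needs beyond a naive count is who initiated each removal, and the platform presumably already records that, since the owner takes the action in the app.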

Normal bot removal might cause a brief recalibration dip of a week or two as the algorithm adjusts to your cleaner audience, but mass removal, even when it’s legitimate platform hygiene, looks like abnormal activity. If a three-month shadowban window applies, a penalty starting October 18 would put potential recovery around mid-January 2026. I guess we’ll see what happens then. If I’m still around. I’d already kind of given up on X as a marketing channel anyway.

Platforms regularly tout their commitment to authentic engagement and platform integrity. X periodically purges fake accounts and positions itself as fighting bot networks and spam—the company’s own policies explicitly prohibit “inauthentic engagement” and encourage users to maintain genuine audiences. But the algorithm doesn’t know what the policy says. When creators do that work themselves—proactively auditing their audiences and removing the same bad actors X claims to oppose—they get flagged and suppressed by the same system that’s supposed to reward authentic behavior. X’s stated values and X’s algorithmic incentives are in direct conflict: the policy team writes guidelines promoting audience hygiene while the engineering team deploys pattern-matching that penalizes it. The observable result suggests the system rewards inflated metrics over audience quality: keeping bots maintains your algorithmic reach even as they slowly degrade your engagement rates, while removing them triggers immediate penalties despite improving your actual audience quality and long-term metric health. Two bad options present themselves—tolerate fake followers to maintain reach, or optimize for real engagement and accept platform punishment—and there’s no path where doing the right thing benefits you algorithmically.

This creates what appears to be a structural incentive to leave your audience polluted. The cleaner your follower base, the more the algorithm suspects manipulation; the more bots you tolerate, the better your distribution—at least until the platform decides to purge them itself, at which point you absorb the penalty anyway but without having chosen the timing. Even the recommended mitigation strategy reveals the problem: avoid drastic changes in activity patterns to prevent triggering algorithmic flags, which is to say, clean your audience slowly enough that the algorithm doesn’t notice you’re doing platform hygiene. The system punishes efficiency and rewards opacity, optimizing for metrics that don’t map to actual value—follower counts, impression volume, engagement signals regardless of quality or intent. It can’t differentiate between authentic community building and metric gaming because it’s measuring the wrong things, and when the numbers look identical, the system defaults to punishing anomalies rather than rewarding quality. Harassment accounts that hate-follow you generate engagement signals the algorithm counts as positive interaction, and removing them costs you those signals. The platform’s engagement metrics don’t distinguish between constructive conversation and coordinated harassment, because both look like engagement to the algorithm.

You’d think AI could solve that, right? It’s not like X doesn’t have its own damn LLM that rhymes with cock.

Which is what I’m getting straight up the—

Ahem.

Anyway, this isn’t just about one shadowban or one platform’s broken incentive structure—it’s about the fundamental dependency relationship between creators and algorithmic distribution. You can build a following, create content, develop an audience, but you don’t own any of it. The algorithm decides your reach, platform policy changes can tank your distribution overnight, and cleaning your own audience can get you penalized as severely as violating terms of service. You’re optimizing for metrics the platform controls, under rules the platform can change unilaterally, with no recourse when the system makes mistakes. When a platform’s incentive structure actively discourages the behavior it claims to want, you’re not building a sustainable audience—you’re renting attention at terms that can change without notice.

Email lists don’t shadowban you for list hygiene. Your website doesn’t penalize you for removing spam accounts. RSS feeds don’t throttle your distribution because you blocked bad actors. Owned channels give you actual control over audience access and quality optimization, while platform reach functions as a marketing channel rather than an asset—it can supplement owned audiences, but it can’t replace them. Every creator depending on algorithmic distribution for their primary audience is one automated flag, one policy update, one platform recalibration away from losing reach they spent years building.

Consider me a case study in why you can’t trust platforms with your audience.

Instead, build where you own the ground.

Discover more from The Annex


One thought on “I Optimized My Audience for Quality, and X Screwed Me for It”